Abstract—In visual robot self-localization, graph-based scene

representation and matching have recently attracted research interest as robust

and discriminative methods for self-localization. Although effective, their computational and

storage costs do not scale well to large-size environments. To alleviate this

problem, we formulate self-localization as a graph classification problem and

attempt to use the graph convolutional neural network (GCN) as a graph

classification engine. A straightforward approach is to use visual feature

descriptors that are employed by state-of-the-art self-localization systems,

directly as graph node features. However, their superior performance in the

original self-localization system may not necessarily be replicated in

GCN-based self-localization. To address this issue, we introduce a novel

teacher-to-student knowledge-transfer scheme based on rank matching, in which

the reciprocal-rank vector output by an off-the-shelf state-ofthe-art

teacher self-localization model is used as the dark knowledge to transfer.

Experiments indicate that the proposed graph-convolutional self-localization

network (GCLN) can significantly outperform state-of-the-art self-localization

systems, as well as the teacher classifier. The code and dataset are available

at https://github.com/KojiTakeda00/Reciprocal rank KT GCN.

Members: Tanaka Kanji, Takeda

Koji, Kurauchi Kanya

Relevant

Publication:

Bibtex source, Document

PDF

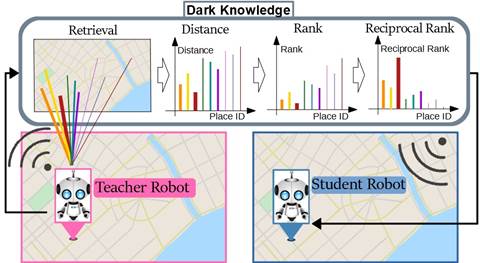

Fig. 1. We propose the use of the

reciprocal-rank vector as the dark knowledge to be transferred from a

self-localization model (i.e., teacher) to a graph convolutional

self-localization network (i.e., student), for improving the self-localization

performance.

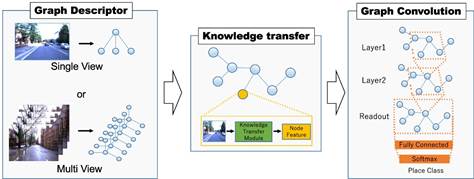

Fig.

2. System architecture.

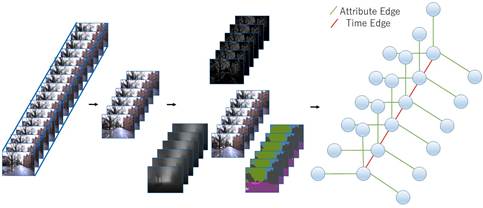

Fig.

3. Single-view sub-image-level scene graph

(SVSL).

Fig.

4. Multi-view image-level scene

graph (MVIL).

Fig.

5. Knowledge transfer on node feature

descriptor.

TABLE I

STATISTICS OF THE DATASET.

|

date |

weather |

#images |

detour |

roadworks |

|

2015-08-28-09-50-22 |

sun |

31,855 |

~ |

~ |

|

2015-10-30-13-52-14 |

overcast |

48,196 |

~ |

~ |

|

2015-11-10-10-32-52 |

overcast |

29,350 |

~ |

◦ |

|

2015-11-12-13-27-51 |

clouds |

41,472 |

◦ |

◦ |

|

2015-11-13-10-28-08 |

overcast, sun |

42,968 |

|

|

~ ~

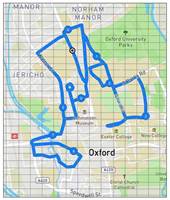

Fig.

6. Example of place

partitioning.

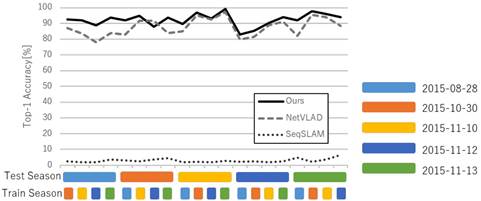

TABLE II AVERAGE TOP-1

ACCURACY.

|

Method |

Average

Top-1 Accuracy |

|

Ours |

92.4 |

|

NetVLAD |

87.9 |

|

SeqSLAM |

2.9 |

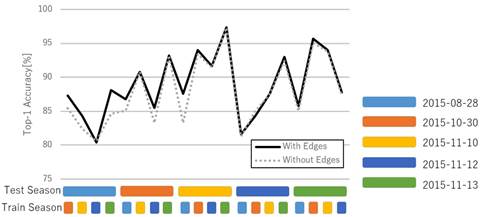

TABLE III

PERFORMANCE RESULTS VERSUS THE GRAPH

STRUCTURE.

|

Method |

Average

Top-1 accuracy |

|

Attribute

edges and Time edges |

92.3 |

|

w/o

all edges |

89.0 |

|

w/o

attribute edges |

91.5 |

|

w/o

time edges |

88.7 |

|

w/o

attribute node/edge |

91.7 |

TABLE IV

PERFORMANCE RESULTS FOR DIFFERENT

COMBINATIONS OF K AND

IMAGE FILTERS.

|

number

of nodes |

combination |

Average

Top-1 accuracy |

|

k=2 |

canny |

92.1 |

|

depth |

91.8 |

|

|

semantic |

92.3 |

|

|

k=3 |

canny-depth |

92.0 |

|

canny-semantic |

92.4 |

|

|

depth-semantic |

92.1 |

|

|

k=4 |

canny-depth-semantic |

92.3 |

|

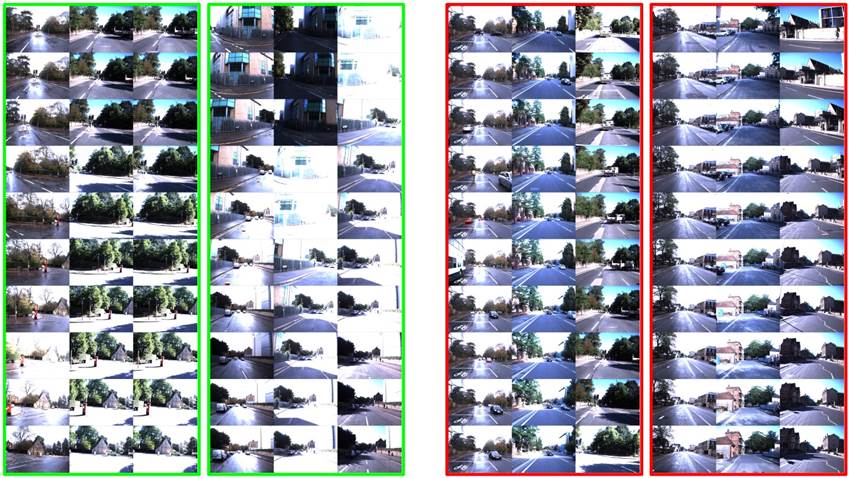

Fig.

7. Example

results. From left to right, the panels show the query scene, the top-ranked

DB scene, and the ground-truth DB scene. Green and red |

bounding boxes indicate gsuccessh

and gfailureh examples, respectively.

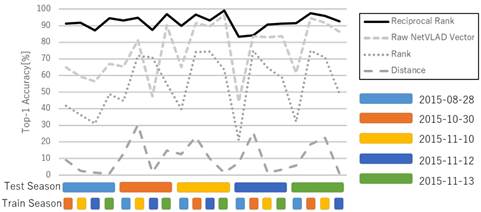

Fig. 8. Performance

results for single-view scene graph with and without edge connections.

Fig. 9. Performance results for different training and

test season pairs.

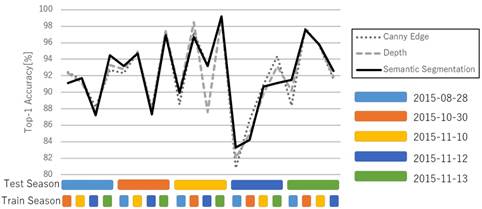

Fig. 10. Performance results versus

the choice of attribute image descriptors (K=2).

Fig. 11. Performance

results for individual training/test season pairs (K=2).